AI Monitoring

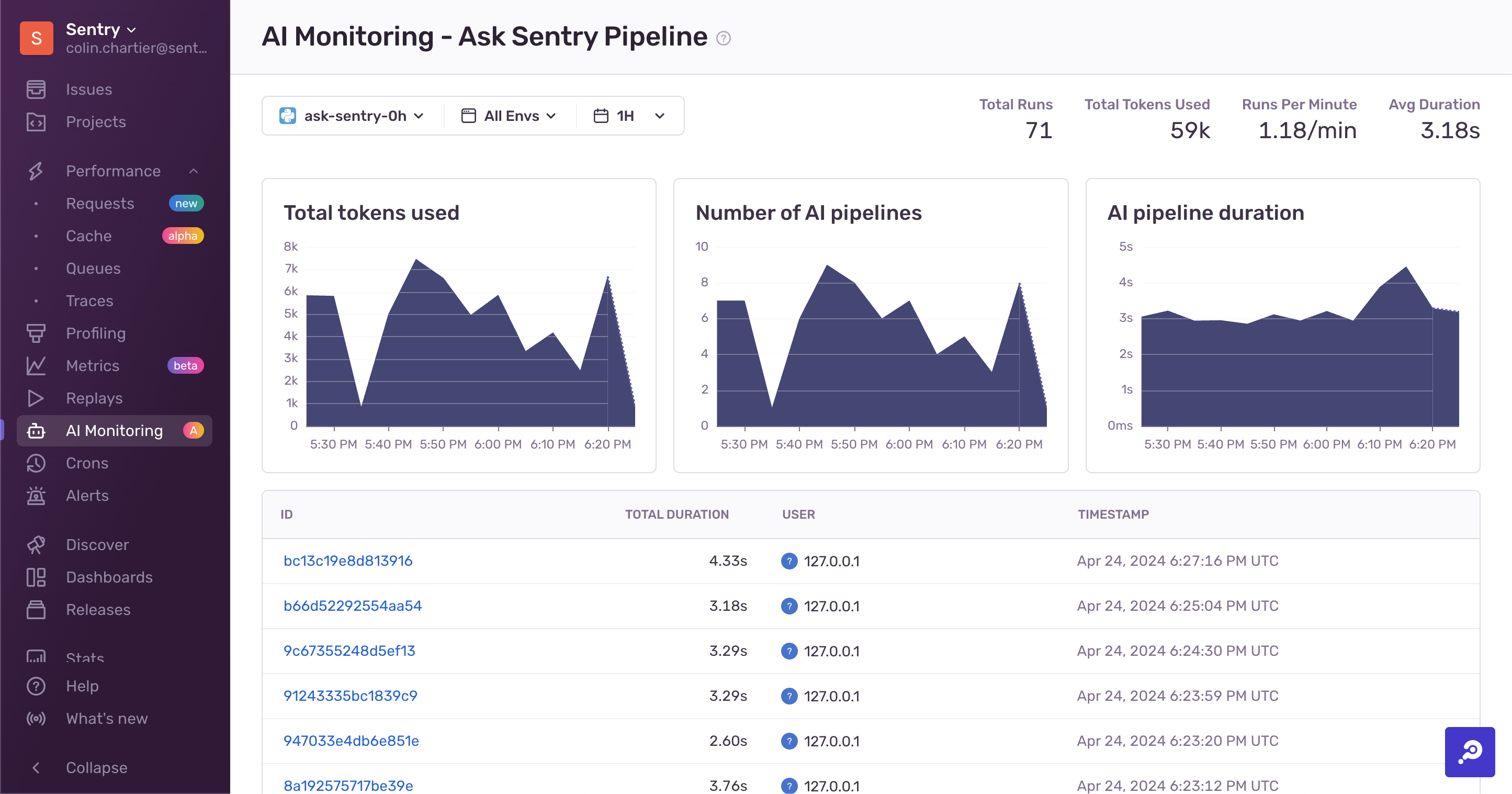

Sentry AI Monitoring helps you understand your LLM calls.

This feature is currently in Alpha. Alpha features are still in-progress and may have bugs. We recognize the irony.

Sentry's AI Monitoring tools help you understand what's going on with your AI pipelines. They automatically collect information about prompts, tokens, and models from providers like OpenAI. Typical situations where this helps include:

- Users are reporting issues with an AI workflow, and you want to investigate responses from the relevant large language models.

- Workflows have been failing due to high token usage, and you want to find out what is driving that usage.

- Users report that AI workflows are taking longer than usual, and you want to find out which steps in a workflow are slowest.

To use AI Monitoring, you must have an existing Sentry account and project set up. If you don't have one, create a Sentry account first.

- Learn how to set up Sentry's AI Monitoring.

Help improve this content

Our documentation is open source and available on GitHub. Your contributions are welcome, whether fixing a typo (drat!) or suggesting an update ("yeah, this would be better").